While coding Python web applications with Flask, I am too lazy to install additional services (a web server and so on) on the local PC. It would be good just to run Colab in the Google cloud and reach the web server running inside it through a fixed subdomain name.

Web requests can be tunneled to web applications behind NAT using various services. The most popular is ngrok. A truly free alternative is localtunnel.

Ngrok for tunneling requests to web applications in Colab

The simplest way to tunnel a web server running on a local IP address in Colab to the external Internet is to use the popular ngrok service.

Variant 1.

!pip install flask-ngrok

from flask import Flask
from flask_ngrok import run_with_ngrok

app = Flask(__name__)
run_with_ngrok(app)  # open an ngrok tunnel when the app starts

@app.route("/test")
def home():
    return "<h1>GFG is great platform to learn</h1>"

app.run()

After running the code we will get a result like:

 * Serving Flask app "__main__" (lazy loading)
 * Environment: production
   WARNING: This is a development server. Do not use it in a production deployment.
   Use a production WSGI server instead.
 * Debug mode: off
 * Running on http://127.0.0.1:5000/ (Press CTRL+C to quit)
 * Running on http://1680e48e0f6a.ngrok.io
 * Traffic stats available on http://127.0.0.1:4040

The URL in the line “Running on http://1680e48e0f6a.ngrok.io” is accessible from outside Colab.

Variant 2.

The flask_ngrok library is quite old and does not support some important ngrok options, such as auth parameters and a subdomain name.

The subdomain is a very important parameter for running the server under a fixed domain name: not 1680e48e0f6a.ngrok.io, but something like test20210701.ngrok.io. The domain name must be unique, of course.

We will use the pyngrok library to run ngrok with the required parameters.

import os
import threading

!pip install pyngrok

from flask import Flask
from pyngrok import ngrok

os.environ["FLASK_ENV"] = "development"

app = Flask(__name__)
port = 5000

# Setting an auth token allows us to open multiple tunnels at the same time
ngrok.set_auth_token("<YOUR_AUTH_TOKEN>")

# Open a ngrok tunnel to the HTTP server
public_url = ngrok.connect(port).public_url
print(" * ngrok tunnel \"{}\" -> \"http://127.0.0.1:{}\"".format(public_url, port))

# Update any base URLs to use the public ngrok URL
app.config["BASE_URL"] = public_url

# Define Flask routes
@app.route("/")
def index():
    return "Hello from Colab!"

# Start the Flask server in a new thread
threading.Thread(target=app.run, kwargs={"use_reloader": False}).start()

After running the code we will get a response like:

 * ngrok tunnel "http://ae7250c89181.ngrok.io" -> "http://127.0.0.1:5000"
 * Serving Flask app "__main__" (lazy loading)
 * Environment: development
 * Debug mode: on

We can specify a subdomain in the ngrok parameters:

public_url = ngrok.connect(port, subdomain="foo20210701").public_url

and get an error like “Only paid plans may bind custom subdomains.\nFailed to bind the custom subdomain ‘foo20210701’ for the account ‘XXXXXX’.\nThis account is on the ‘Free’ plan.\n\nUpgrade to a paid plan at:”

The ngrok pricing plans are listed on the ngrok website. At the time of writing, the cheapest plan that supports subdomains costs 5 USD per month.

Running Gunicorn in Colab

Gunicorn (“Green Unicorn”) is a Python WSGI HTTP server for UNIX. We will run Gunicorn in Colab. The test Flask web script is:

def generateWebApp():
    # Source of a minimal Flask app, written to webapp.py so Gunicorn can import it
    text = (
        "from flask import Flask\n"
        "app = Flask(__name__)\n"
        "\n"
        "@app.route(\"/\")\n"
        "def hello():\n"
        "    return \"Hello Localtunnel\"\n"
        "\n"
        "if __name__ == \"__main__\":\n"
        "    app.run()\n"
    )
    #print(text)
    with open('webapp.py', 'w') as f:
        f.write(text)

generateWebApp()

Run the Gunicorn server in Colab:

!pip install gunicorn
!gunicorn --workers 2 -b localhost:8000 webapp:app

After running Gunicorn in Colab we will get a result like:

[2021-07-04 03:16:34 +0000] [332] [INFO] Starting gunicorn 20.1.0
[2021-07-04 03:16:34 +0000] [332] [INFO] Listening at: http://127.0.0.1:8000 (332)
[2021-07-04 03:16:34 +0000] [332] [INFO] Using worker: sync
[2021-07-04 03:16:34 +0000] [335] [INFO] Booting worker with pid: 335
[2021-07-04 03:16:34 +0000] [336] [INFO] Booting worker with pid: 336
[2021-07-04 03:23:47 +0000] [332] [INFO] Handling signal: int
[2021-07-04 03:23:47 +0000] [335] [INFO] Worker exiting (pid: 335)
[2021-07-04 03:23:47 +0000] [336] [INFO] Worker exiting (pid: 336)
[2021-07-04 03:23:47 +0000] [332] [INFO] Shutting down: Master

Everything is OK, but the question is how to reach the web server running locally in Colab from outside through some subdomain.

Executing localtunnel in Colab

To solve this task we can use localtunnel. This service provides subdomains for free. It’s a very useful option for developing and debugging short test tasks.

In our case Gunicorn is running on port 8000 in the Colab virtual machine, and we need to tunnel all external requests from the subdomain https://smarthome161075.loca.lt to this server.

The subdomain name on the localtunnel command line must be LOWER CASE only. Otherwise the tunnel does not start.
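Since an upper-case letter is enough to keep the tunnel from starting, it can help to normalize the name in Python before passing it to lt. The following is a minimal sketch; the helper name normalize_subdomain is hypothetical, and the allowed characters and length limits in the pattern are assumptions for illustration (only the lower-case rule comes from the text above).

```python
import re

# Assumption: the character set and the 4..63 length range are illustrative;
# only the lower-case requirement is taken from the article.
_SUBDOMAIN_RE = re.compile(r"[a-z0-9][a-z0-9-]{2,61}[a-z0-9]")


def normalize_subdomain(name: str) -> str:
    """Return the name in lower case, or raise ValueError if it looks unusable."""
    candidate = name.strip().lower()
    if not _SUBDOMAIN_RE.fullmatch(candidate):
        raise ValueError(f"unsuitable subdomain name: {name!r}")
    return candidate


print(normalize_subdomain("SmartHome161075"))  # prints smarthome161075
```

The returned string can then be stored in a Python variable and used in the lt command line.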

# Deploy localtunnel on Colab
!npm install -g localtunnel
!lt --port 8000 --subdomain smarthome161075

As a result we will get a response like:

your url is: https://smarthome161075.loca.lt
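When the output of lt is captured as text, for example read back from a log file, the public URL can be picked out of it in Python. This is a small sketch that assumes the output contains a line in the "your url is: ..." form shown above; the function name extract_tunnel_url is hypothetical.

```python
import re
from typing import Optional


def extract_tunnel_url(output: str) -> Optional[str]:
    """Return the URL from a 'your url is: ...' line, or None if there is none."""
    match = re.search(r"your url is:\s*(https?://\S+)", output)
    return match.group(1) if match else None


sample_output = "your url is: https://smarthome161075.loca.lt\n"
print(extract_tunnel_url(sample_output))  # prints https://smarthome161075.loca.lt
```

Returning None instead of raising makes it easy to poll the log until the tunnel has announced its address.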

Everything works, but only as a single-threaded application in Colab: the cell is blocked while the command runs. We need to run the Gunicorn and localtunnel processes in Colab as background (multitasking) processes so that they work in parallel.

Multiple threads in Colab

We need both processes, Gunicorn and localtunnel, to run simultaneously as background processes in Colab and work independently of each other. To solve this task I will use the UNIX command nohup. For example, to run localtunnel as a background process we need to execute the following in Colab:

!nohup lt --port 8000 --subdomain $domainName > lt.log 2>&1 &

Colab will run localtunnel as a background process. All stdout and stderr messages of localtunnel will go to lt.log. Since in this case we can’t terminate the running process from the Colab web GUI, we need to use the kill command to stop it. To get the PID of the running process we need to execute:

!ps -ef | grep lt

And we will get a result like:

root 52 1 0 03:46 ? 00:00:08 /usr/local/bin/dap_multiplexer --domain_socket_path=/tmp/debugger_bi5ptuks4
root 793 62 0 04:37 ? 00:00:00 /bin/bash -c lt --port 8000 --subdomain smarthome161075 > lt.log 2>&1
root 794 793 0 04:37 ? 00:00:00 node /tools/node/bin/lt --port 8000 --subdomain smarthome161075
root 1107 62 0 05:29 ? 00:00:00 /bin/bash -c ps -ef | grep "lt"
root 1109 1107 0 05:29 ? 00:00:00 grep lt

The process we need is running with PID = 794. To terminate it we can use the command:

!kill 794
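An alternative to looking up the PID with ps is to start the process from Python and keep its handle. The sketch below uses the standard subprocess module; the sleeping Python process is only a stand-in so that the example runs anywhere, and the log file name background.log is hypothetical.

```python
import os
import subprocess
import sys
import tempfile

# Stand-in command (assumption for illustration). In Colab this could be
# ["lt", "--port", "8000", "--subdomain", "smarthome161075"].
command = [sys.executable, "-c", "import time; time.sleep(60)"]

# Start the process in the background and redirect its output to a log file.
log_path = os.path.join(tempfile.gettempdir(), "background.log")
with open(log_path, "w") as log_file:
    proc = subprocess.Popen(command, stdout=log_file, stderr=subprocess.STDOUT)

print("started PID:", proc.pid)
print("still running:", proc.poll() is None)

# Stop it through the handle; terminate() sends SIGTERM, like a plain `kill <pid>`.
proc.terminate()
proc.wait(timeout=10)
print("finished:", proc.returncode is not None)
```

Because the Popen object remembers the PID, there is no need to search the process list before stopping the process.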

Similarly, we can run Gunicorn in Colab as a background process:

!nohup gunicorn --workers 2 -b localhost:8000 webapp:app > gunicorn.log 2>&1 &

!ps -ef | grep gunicorn

The log file gunicorn.log contains all the information we need about the PIDs of the running workers; we can also see them in the output of the ps command:

root 360 1 0 04:06 ? 00:00:00 [gunicorn] <defunct>
root 515 1 0 04:24 ? 00:00:00 [gunicorn] <defunct>
root 599 1 0 04:28 ? 00:00:00 [gunicorn] <defunct>
root 779 1 8 04:37 ? 00:00:00 /usr/bin/python3 /usr/local/bin/gunicorn --workers 2 -b localhost:8000 webapp:app
root 785 779 3 04:37 ? 00:00:00 /usr/bin/python3 /usr/local/bin/gunicorn --workers 2 -b localhost:8000 webapp:app
root 786 779 3 04:37 ? 00:00:00 /usr/bin/python3 /usr/local/bin/gunicorn --workers 2 -b localhost:8000 webapp:app
root 787 62 0 04:37 ? 00:00:00 /bin/bash -c ps -ef | grep "gunicorn"
root 789 787 0 04:37 ? 00:00:00 grep gunicorn

We see that the main process with PID = 779 creates two child processes (workers) with PIDs 785 and 786.
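The same reading of the process list can be done in Python. The sketch below parses text in the `ps -ef` column layout and groups worker PIDs under their master by comparing the PID and PPID columns. The function name gunicorn_tree is hypothetical, and matching on a path ending in /gunicorn is an assumption based on the sample output in this article.

```python
from typing import Dict, List


def gunicorn_tree(ps_output: str) -> Dict[int, List[int]]:
    """Map each Gunicorn master PID to the list of its worker PIDs."""
    parents: Dict[int, int] = {}  # PID -> parent PID, live Gunicorn processes only
    for line in ps_output.splitlines():
        fields = line.split(None, 7)  # UID PID PPID C STIME TTY TIME CMD
        if len(fields) < 8 or not (fields[1].isdigit() and fields[2].isdigit()):
            continue  # header or malformed line
        # Assumption: Gunicorn is launched through a path ending in /gunicorn.
        # This skips the defunct entries and the grep command itself.
        if any(token.endswith("/gunicorn") for token in fields[7].split()):
            parents[int(fields[1])] = int(fields[2])
    # A master is a Gunicorn process whose parent is not a Gunicorn process.
    tree: Dict[int, List[int]] = {
        pid: [] for pid, ppid in parents.items() if ppid not in parents
    }
    for pid, ppid in parents.items():
        if ppid in tree:
            tree[ppid].append(pid)
    return tree


sample_ps = """\
root 360 1 0 04:06 ? 00:00:00 [gunicorn] <defunct>
root 779 1 8 04:37 ? 00:00:00 /usr/bin/python3 /usr/local/bin/gunicorn --workers 2 -b localhost:8000 webapp:app
root 785 779 3 04:37 ? 00:00:00 /usr/bin/python3 /usr/local/bin/gunicorn --workers 2 -b localhost:8000 webapp:app
root 786 779 3 04:37 ? 00:00:00 /usr/bin/python3 /usr/local/bin/gunicorn --workers 2 -b localhost:8000 webapp:app
root 787 62 0 04:37 ? 00:00:00 /bin/bash -c ps -ef | grep "gunicorn"
root 789 787 0 04:37 ? 00:00:00 grep gunicorn
"""
print(gunicorn_tree(sample_ps))  # prints {779: [785, 786]}
```

Stopping the master PID is normally enough, since Gunicorn shuts down its workers when the master exits, as the earlier log shows.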

It’s not so easy to terminate background processes in Colab this way. So let us write a Python script for Colab that runs the Gunicorn and localtunnel processes in the background in a more natural way.

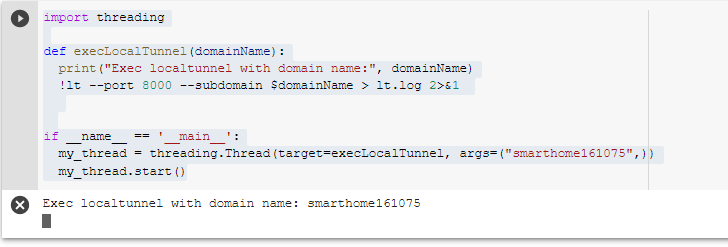

import threading

def execLocalTunnel(domainName):
    print("Exec localtunnel with domain name:", domainName)
    # Shell escape: $domainName is expanded from the Python variable
    !lt --port 8000 --subdomain $domainName > lt.log 2>&1

if __name__ == '__main__':
    my_thread = threading.Thread(target=execLocalTunnel, args=("smarthome161075",))
    my_thread.start()

In this case we can terminate the running localtunnel in Colab just by clicking the cross.

Similarly, we can run Gunicorn in Colab as a background process:

def execGunicorn(port = 8000):
    print("Exec Gunicorn with port:", port)
    !gunicorn --workers 2 -b localhost:$port webapp:app > gunicorn.log 2>&1 &

if __name__ == '__main__':
    gunicorn_thread = threading.Thread(target=execGunicorn, args=(8000,))
    gunicorn_thread.start()

So, now we can run the web server and give access to it from outside Colab using a fixed subdomain name.
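Because the server is started in the background, it may not be listening yet when the next cell runs. One way to handle this is to poll the local address before opening the tunnel or sharing the URL. Below is a minimal sketch with the standard library only; wait_for_server is a hypothetical helper, and the small HTTP server is just a stand-in for Gunicorn so that the example is self-contained.

```python
import http.server
import threading
import time
import urllib.error
import urllib.request

# Ignore proxy settings from the environment: the target is a local address.
_opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def wait_for_server(url: str, timeout: float = 10.0, interval: float = 0.2) -> bool:
    """Poll url until the server answers or the timeout expires."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with _opener.open(url, timeout=2):
                return True
        except urllib.error.HTTPError:
            return True  # the server answered, even if with an error status
        except OSError:
            time.sleep(interval)  # not listening yet (URLError is an OSError)
    return False


class HelloHandler(http.server.BaseHTTPRequestHandler):
    """Stand-in for the Flask app served by Gunicorn (assumption for illustration)."""

    def do_GET(self):
        body = b"Hello Localtunnel"
        self.send_response(200)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the example output quiet


server = http.server.HTTPServer(("127.0.0.1", 0), HelloHandler)  # port 0 = any free port
threading.Thread(target=server.serve_forever, daemon=True).start()

ok = wait_for_server(f"http://127.0.0.1:{server.server_address[1]}/")
print("server is up:", ok)

server.shutdown()
server.server_close()
```

For the setup in this article the URL to poll would be http://127.0.0.1:8000/, the address Gunicorn is bound to.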